Batch AuthorizedProjectsWorker calls together

Queue: authorized_projects

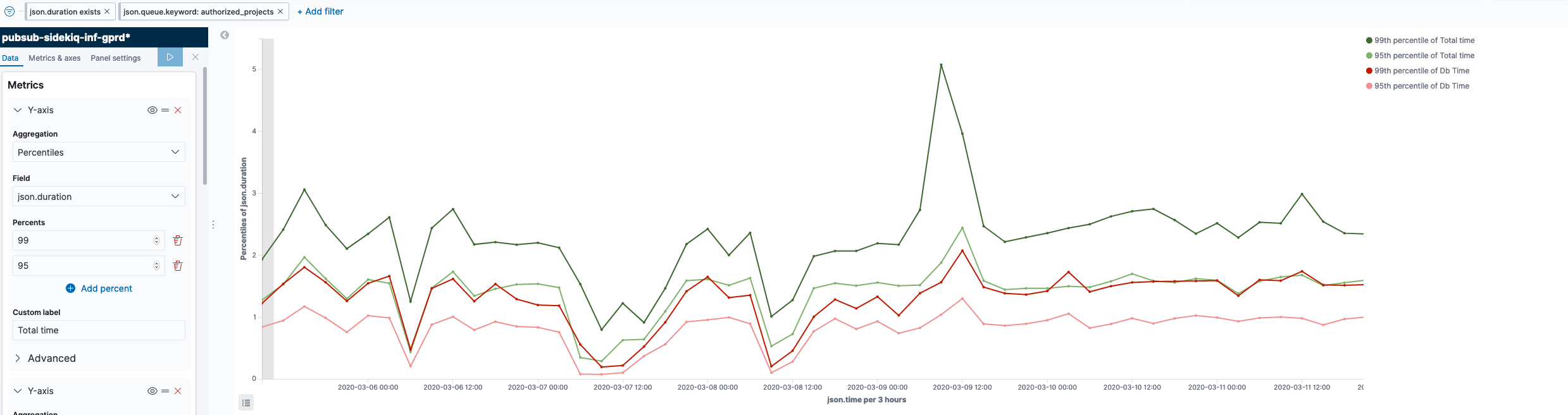

Looking at the duration over the past 7 days for this job, compared to the time it spends in the database: https://log.gprd.gitlab.net/goto/d60038d30f6ef70e231b0d99974d32f3

I believe this job is mostly database work. Processing jobs for 4 users at a time would therefore bring the P99 to about 8s of database time, which leaves about 2s of headroom within the 10s that the job waiter waits for the jobs.
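The estimate above can be written out as a small calculation. This is only a sketch: the per-user P99 of 2s is inferred from "4 users is about 8s", it assumes the users in a batch are processed sequentially so their database time adds up, and all names are hypothetical.

```ruby
# Numbers taken from the estimate above; they are assumptions, not measured constants.
WAITER_TIMEOUT_S = 10.0        # how long the job waiter waits for the jobs
HEADROOM_S = 2.0               # slack kept for anything that is not database time
P99_DB_TIME_PER_USER_S = 2.0   # inferred from "4 users is about 8s"

# Largest batch whose worst-case database time still fits inside the waiter timeout.
def max_batch_size(timeout_s:, headroom_s:, per_user_s:)
  ((timeout_s - headroom_s) / per_user_s).floor
end

batch_size = max_batch_size(
  timeout_s: WAITER_TIMEOUT_S,
  headroom_s: HEADROOM_S,
  per_user_s: P99_DB_TIME_PER_USER_S
)
puts batch_size # prints 4
```

If the per-user P99 turns out to be higher than assumed, the same calculation gives a smaller batch size.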

When we're iterating through users in a BatchAuthorizedProjectsWorker, we need to keep track of the users whose authorizations were updated successfully and the users for whom the update failed, so we can retry the failures.

To avoid getting into an infinite loop, we could schedule a single-user job for each user that failed. I don't think there will be a lot of those jobs, since the current job only rarely fails.

For the jobs that only process a single user, we don't keep track of the failures, but instead let them raise the exception.

We should not pass the waiter on to the jobs being retried.
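The failure handling described above could look roughly like the following. This is a minimal sketch in plain Ruby, not the actual worker: the class names, the in-memory `SCHEDULED_JOBS` array standing in for the Sidekiq queue, and the `refresher` callable standing in for the authorization refresh are all hypothetical.

```ruby
# In-memory stand-in for the queue so the sketch runs on its own (hypothetical).
SCHEDULED_JOBS = []

# Hypothetical single-user worker: it does not track failures and lets the
# exception raise, so the normal job retry mechanism applies.
class SingleUserWorkerSketch
  def self.perform_async(user_id)
    SCHEDULED_JOBS << [name, user_id]
  end

  def perform(user_id, refresher)
    refresher.call(user_id)
  end
end

# Hypothetical batch worker: it records which users failed and hands each of
# them to a single-user job instead of scheduling another batch.
class BatchWorkerSketch
  def perform(user_ids, refresher)
    failed = []

    user_ids.each do |user_id|
      begin
        refresher.call(user_id)
      rescue StandardError
        failed << user_id
      end
    end

    # One single-user job per failure, and no waiter is passed along.
    # A failed batch never schedules another batch, so it cannot loop.
    failed.each { |user_id| SingleUserWorkerSketch.perform_async(user_id) }

    { succeeded: user_ids - failed, failed: failed }
  end
end

# Usage: user 2 fails, users 1 and 3 succeed.
refresher = ->(user_id) { raise "refresh failed" if user_id == 2 }
result = BatchWorkerSketch.new.perform([1, 2, 3], refresher)
```

Because the retry path only ever creates single-user jobs, the number of follow-up jobs is bounded by the size of the original batch.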

We should sort the user IDs before scheduling the batch jobs. That way we can still deduplicate the jobs if they are scheduled by different actions.
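As an illustration of why the ordering matters, here is a small sketch that builds the batch arguments. The helper name and the batch size of 4 are assumptions, and deduplication here is assumed to compare job arguments for equality.

```ruby
BATCH_SIZE = 4 # assumed batch size, taken from the estimate earlier in this issue

# Builds the argument list for each batch job (hypothetical helper).
# Removing duplicates and sorting first means the same set of users always
# produces the same arguments, regardless of the order they arrived in.
def batch_job_args(user_ids, batch_size: BATCH_SIZE)
  user_ids.uniq.sort.each_slice(batch_size).to_a
end

from_action_a = batch_job_args([7, 3, 9, 1, 5])
from_action_b = batch_job_args([5, 1, 9, 3, 7, 3])

p from_action_a                   # prints [[1, 3, 5, 7], [9]]
p from_action_a == from_action_b  # prints true
```

Note that this only makes jobs identical when two actions cover the same set of users. If the sets differ, the slices can fall on different boundaries and the jobs will not match.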

Issue for the proposal in #148 (closed):

Batch jobs together

As a corollary to the above, we could consider chunking jobs together. We could have a BatchAuthorizedProjectsWorker that takes, say, 100 user IDs, and performs them all in a single job. The considerations here are:

- Batch size: too small and it's not effective, too big and the worker might be too slow to be marked as latency-sensitive.

- Error handling: we'd need to track which - if any - user IDs we failed to update and schedule a new job to handle those, but we'd need to be careful to not just create an infinite loop (as it would be a new job, not retrying an existing one).